OpenAI has made a groundbreaking move by launching a tool that attempts to distinguish human-written text from AI-generated text. The tool, called OpenAI AI Text Classifier, is trained on text from 34 text-generating systems, including OpenAI’s own ChatGPT and GPT-3 models.

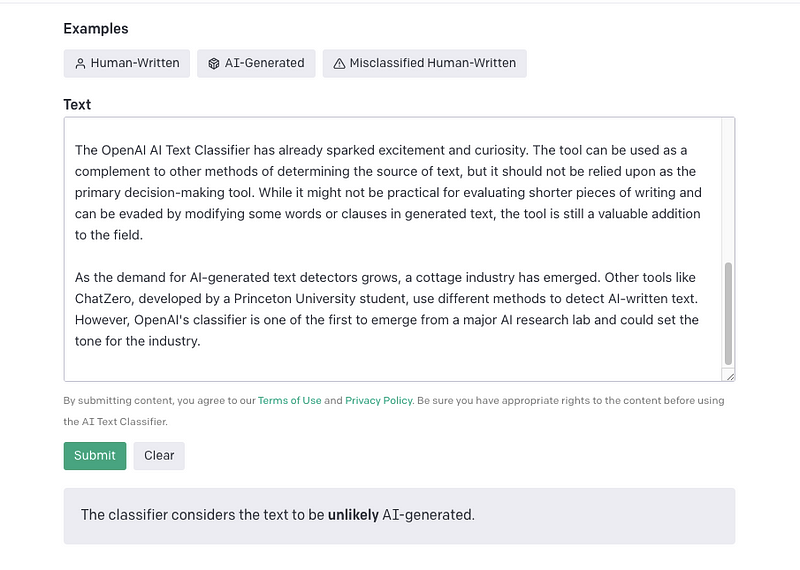

The OpenAI AI Text Classifier is a fascinating piece of architecture. Like ChatGPT, it is an AI language model. It was fine-tuned on a dataset of pairs of human-written and AI-written text on the same topic so that it can predict how likely a piece of text is to have been generated by AI. The classifier then labels the text according to its confidence: “very unlikely” AI-generated, “unlikely” AI-generated, “unclear if it is” AI-generated, “possibly” AI-generated, or “likely” AI-generated.

Each of the five categories corresponds to a range of predicted probability that the text is AI-generated: “Very Unlikely” for a probability below 10%, “Unlikely” for 10% to 45%, “Unclear” for 45% to 90%, “Possibly” for 90% to 98%, and finally “Likely” for a probability above 98%.
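The threshold scheme above can be sketched as a small lookup function. This is only an illustration of the ranges quoted in this article, not OpenAI's code: the function name `label_for_probability` is hypothetical, and how the exact boundary values (10%, 45%, 90%, 98%) are assigned is an assumption, since the article does not say which side they fall on.

```python
def label_for_probability(p: float) -> str:
    """Map a predicted probability of AI authorship (0.0 to 1.0) to a label.

    Thresholds follow the ranges quoted in the article. The treatment of
    exact boundary values is an assumption made for this sketch.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p < 0.10:
        return "very unlikely AI-generated"
    if p < 0.45:
        return "unlikely AI-generated"
    if p < 0.90:
        return "unclear if it is AI-generated"
    if p <= 0.98:
        return "possibly AI-generated"
    return "likely AI-generated"


if __name__ == "__main__":
    for p in (0.05, 0.30, 0.60, 0.95, 0.99):
        print(f"{p:.2f} -> {label_for_probability(p)}")
```

Note how wide the “unclear” band is: under these thresholds, any score between 45% and 90% yields no verdict at all.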

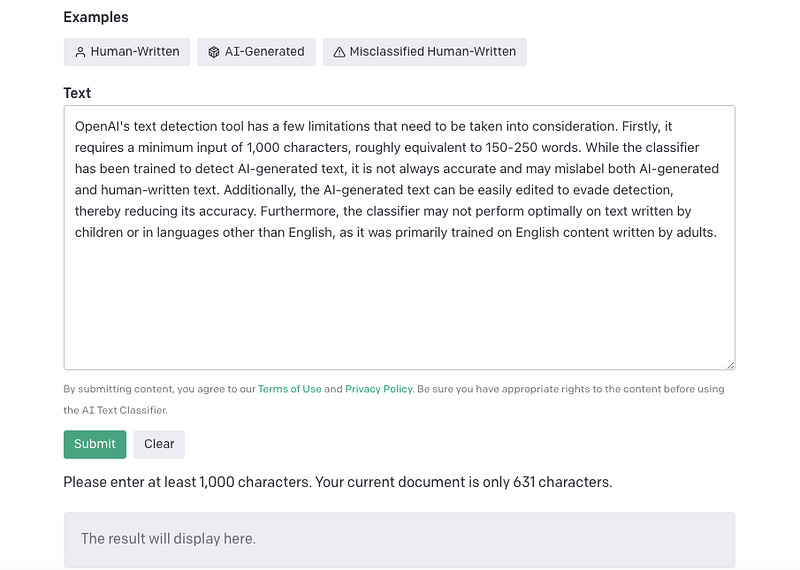

However, the classifier is not without limitations. It needs a minimum of 1,000 characters to work and doesn’t detect plagiarism. OpenAI notes that the classifier is more likely to get things wrong on text written by children or in a language other than English, because its training data is predominantly English. Nor can it guarantee accuracy: in OpenAI’s own evaluation it correctly flagged only 26% of AI-written text as “likely AI-written,” while mislabeling human-written text as AI-written 9% of the time.
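To see what the 26% figure means in practice, it helps to ask how often a “likely AI-generated” flag would actually be right. The sketch below is a back-of-the-envelope calculation, not anything OpenAI published as code. It treats 26% as the true positive rate and uses the 9% false positive rate OpenAI reported alongside it. The share of AI-written text among the inputs (`ai_share`) is a purely hypothetical parameter, and `flag_precision` is a name invented for this example.

```python
def flag_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Fraction of flagged texts that really are AI-written.

    tpr      -- share of AI-written texts that get flagged (0.26 here)
    fpr      -- share of human-written texts that get flagged (0.09 here)
    ai_share -- hypothetical share of AI-written texts among all inputs
    """
    true_flags = tpr * ai_share
    false_flags = fpr * (1.0 - ai_share)
    total_flags = true_flags + false_flags
    if total_flags == 0:
        raise ValueError("no texts would be flagged with these inputs")
    return true_flags / total_flags


if __name__ == "__main__":
    for share in (0.5, 0.1):
        pct = flag_precision(0.26, 0.09, share) * 100
        print(f"AI share {share:.0%}: {pct:.1f}% of flags are correct")
```

Under these assumptions, if half of the submitted texts are AI-written, roughly three out of four flags are correct. If only one in ten is AI-written, fewer than one in four flags are, which is one reason the tool should not be used as the sole basis for a decision.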

The OpenAI AI Text Classifier has already sparked excitement and curiosity. The tool can be used as a complement to other methods of determining the source of text, but it should not be relied upon as the primary decision-making tool. While it might not be practical for evaluating shorter pieces of writing and can be evaded by modifying some words or clauses in generated text, the tool is still a valuable addition to the field.

Limitations of AI Text Classifier

- At least 1,000 characters are necessary for the classifier to work correctly – that’s approximately 150–250 words.

- Although the classifier can make an educated guess on both AI-written and human-written text, mislabeling remains a risk. Because it was trained largely on English text written by adults, it is more likely to make mistakes on non-English text and on writing by children.

- Very generic or predictable text, such as the first five letters of the alphabet, cannot be reliably classified, because a human and an AI would write it the same way.

- Its performance can also decline on inputs that differ from its training data, because neural-network-based models generalize poorly beyond the data they learned from. OpenAI has noted cases where the classifier was highly confident in an incorrect prediction on inputs very different from anything it had seen during training.
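The 1,000-character minimum from the list above is easy to check before submitting anything to a detector. The snippet below is a minimal sketch of such a pre-check. The helper name `is_long_enough` is hypothetical, and counting every character (including whitespace) toward the limit is an assumption, since the article does not say how the classifier counts.

```python
MIN_CHARS = 1000  # minimum input length quoted in the article


def is_long_enough(text: str, min_chars: int = MIN_CHARS) -> bool:
    """Return True if the text meets the minimum character count.

    Assumption: all characters, including spaces and newlines, count.
    """
    return len(text) >= min_chars


if __name__ == "__main__":
    sample = "This sentence is far too short to classify."
    if not is_long_enough(sample):
        missing = MIN_CHARS - len(sample)
        print(f"Too short: add at least {missing} more characters.")
```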

As the demand for AI-generated text detectors grows, a cottage industry has emerged. Other tools, like GPTZero, developed by a Princeton University student, use different methods to detect AI-written text. However, OpenAI’s classifier is one of the first to emerge from a major AI research lab and could set the tone for the industry.

The OpenAI AI Text Classifier is a step in the right direction, but it’s just the beginning. OpenAI is hoping to receive feedback on the tool and plans to share improved methods in the future. With the rapid growth of text-generating AI, it’s vital that the creators of these tools take steps to prevent their potentially harmful effects, and OpenAI is leading the way.

However, detecting AI-generated text is an ongoing battle, much like the pursuit between cybercriminals and security experts. As AI text generation advances, so will the development of detection tools. Still, it’s important to note that these tools, helpful as they may be, are not a foolproof solution. According to OpenAI, they should not be relied upon as the sole determining factor in deciding the authenticity of text. The issue of AI-generated text may never have a definite solution, much like the constant evolution of technology and the hurdles it presents.
